Businesses and academics have joined forces in an £800,000 project to extend the so-called internet of things straight into the classroom.

In the programme, Distance, the consortium for furthering education through advanced technologies, aims to develop “the internet of school things” with eight UK schools.

The University of Cambridge (informally referred to as Cambridge University, or simply as Cambridge) is a public research university located in Cambridge, England, United Kingdom. It is the second-oldest university in the English-speaking world (after the University of Oxford) and the third-oldest surviving university in the world. The mission of the University of Cambridge is to contribute to society through the pursuit of education, learning, and research at the highest international levels of excellence.

Overview

Text mining refers to the process of deriving high-quality information from text. High-quality information is usually obtained by identifying patterns and trends through means such as statistical pattern learning. Text mining usually involves structuring the input text (usually parsing, along with the addition of some derived linguistic features and the removal of others, and subsequent insertion into a database), deriving patterns within the structured data, and finally evaluating and interpreting the output. 'High quality' in text mining usually refers to some combination of relevance, novelty, and interestingness.
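The structure-then-find-patterns flow described above can be sketched in a few lines of Python. This is only a minimal illustration: the stop-word list, the function names `structure` and `top_terms`, and the sample documents are all assumptions made for the example, and "most frequent terms" stands in for the much richer statistical pattern learning that real text mining uses.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list (an assumption for this sketch).
STOP_WORDS = {"the", "a", "of", "and", "is", "in", "to"}

def structure(text):
    """Structure raw text: lowercase it, split it into word tokens, drop stop words."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]

def top_terms(documents, n=3):
    """Derive a very simple pattern: the n most frequent terms across all documents."""
    counts = Counter()
    for doc in documents:
        counts.update(structure(doc))
    return counts.most_common(n)

docs = [
    "Text mining is the process of deriving information from text.",
    "Mining patterns in text requires structuring the text first.",
]
print(top_terms(docs, n=2))
```

Evaluating and interpreting the output, the last step in the paragraph, is left to the reader here: the code only surfaces candidate terms.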

The University of Nottingham is famed for its academic prowess, research capabilities and an attention-grabbing gig by The Clash in the seventies that led to the punk outfit being banned from student unions for several years.

But despite boasting alumni ranging from D. H. Lawrence to the founder of Wetherspoons, the university is about looking forward rather than back – and its IT department is no exception.

Consumers are a very important asset for a company. A business has no prospects without relationships with loyal consumers. This is why a company should plan and follow a clear strategy for how it treats consumers. Customer Relationship Management (CRM) has grown in recent decades to reflect the major role consumers play in setting corporate strategy. CRM includes all measures for understanding consumers and the processes for exploiting this knowledge to design and implement marketing activities, production, and the supply chain. The following definitions of CRM are taken from the literature, among others (Tama, 2009):

Reseller Computacenter has raised its game on diversity by adding its first female member to its previously all-male board of directors. Regine Stachelhaus served as a member of the management board at E.ON from June 2010 to July 2013 and also served as its chief human resources officer until July 2013.

Support Vector Machines (SVMs) are a set of supervised learning methods that analyze data and recognize patterns, used for classification and regression analysis. The original SVM algorithm was invented by Vladimir Vapnik, and the current standard variant (soft margin) was proposed by Corinna Cortes and Vladimir Vapnik (Cortes and Vapnik, 1995).
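To make the soft-margin idea a little more concrete, the sketch below evaluates the commonly stated soft-margin objective for a linear classifier: half the squared norm of the weights plus C times the sum of the hinge losses. It does not train an SVM. The function names, the toy points, and the chosen weights are assumptions for illustration only.

```python
def soft_margin_objective(w, b, points, labels, c=1.0):
    """Return 0.5 * ||w||^2 + C * sum(max(0, 1 - y * (w.x + b)))."""
    regularizer = 0.5 * sum(wi * wi for wi in w)
    hinge_total = 0.0
    for x, y in zip(points, labels):
        margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
        hinge_total += max(0.0, 1.0 - margin)  # zero when the point is beyond the margin
    return regularizer + c * hinge_total

def predict(w, b, x):
    """Classify x as +1 or -1 by the side of the hyperplane it falls on."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

# Toy data: the third point lies on the wrong side, so it contributes slack.
points = [(2.0, 0.0), (-2.0, 0.0), (0.5, 0.0)]
labels = [1, -1, -1]
print(soft_margin_objective((1.0, 0.0), 0.0, points, labels))
```

A trainer would search for the `w` and `b` that minimise this value. The parameter `c` controls how heavily margin violations are penalised relative to a wide margin.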

Overview

Massachusetts Institute of Technology (MIT) is a private research university located in Cambridge, Massachusetts, United States. MIT has five schools and one college, containing a total of 32 academic departments, with a strong emphasis on scientific, engineering, and technological education and research.

More than 100 schools previously rated outstanding by Ofsted inspectors have lost their top rankings after changes to the inspection system in England.

The schools have been reinspected since September, when changes were introduced aimed at putting more weight on teaching.

In the past, it was possible to be rated outstanding even though inspectors judged that teaching and learning were not of the highest standard. But that changed in September, when other new rules also came in.

In the current era, people tend to use technology to socialize with one another. Technology is used to get to know other people without having met them, and this can be done anywhere (mobile). In addition, people need to be able to complete all their activities quickly wherever they are. This has led technology experts to create applications using the SoLoMo method.

Ian Kelly, a 34-year-old IT specialist, is preparing to take the next step in his career.

He came to London and, through the leadership recruitment scheme from Scope, got a job at the Olympics in HR, then moved to City Hall, before landing a job at Lehman Brothers in technology just as the investment bank went bust.

According to his LinkedIn profile, Kelly took an HND in computing as a mature student at the University of Plymouth and held a variety of posts as a sound engineer, working at smaller and larger gigs all around the country. He says: “I gave that up, did some travelling and reassessed what I wanted to do.”

CRISP-DM (Cross-Industry Standard Process for Data Mining) is a process model developed by a consortium of companies that was established under the European Commission in 1996. It has become a standard process for data mining that can be applied across various industrial sectors. The image below describes the data mining development life cycle as defined in CRISP-DM.

Overview

Ethical hacking is hacking activity carried out on a network with the permission of its owner. Hacking activity is increasing every year, which raises the need for a high level of security in network systems. This post discusses hacker certification, often called Certified Ethical Hacker (CEH). Ethical hacking is an activity undertaken by a hacker with the permission and consent of the system owner. The goal is to increase the security level of a system.

Overview

In the last post, we learned about Formative Evaluation, which has three types, and Summative Evaluation, which has four types. Today, to conclude the evaluation of e-learning environments, we will learn about the third type of evaluation, Monitoring / Integrative Evaluation.

In the last post, we learned about Formative Evaluation, which has three types: Design-Based, Expert-Based, and User-Based. Today we will learn about the second type of evaluation, Summative Evaluation.

The human brain is a miracle given by God. In it, there are a gazillion brain cells that keep working every second of every day. The function of the brain is to regulate the work of the entire human body. With the brain, people can do anything, including activities such as creating advanced civilizations, music, art, science, and language.

Our brain is divided into two parts, the left brain and the right brain. The left brain governs academic thinking, while the right brain regulates artistic activity and the like. But, according to research, less than 1% of the potential of the human brain is used.

Taiwan's Economic Daily News reports [Google translation] that shortages of Retina display panels for Apple's planned second-generation iPad mini have forced the company to push back its internal launch plans into early 2014. The company reportedly had been planning to launch the device during the fourth quarter of 2013, in time for the holiday shopping season.

Overview

e-Learning is a type of learning that allows teaching materials to be delivered to students using the Internet, an intranet, or other computer network media. [Darin E. Hartley]

e-Learning is an educational system that uses electronic applications to support learning and teaching via the Internet, a computer network, or a standalone computer. [LearnFrame.com]

Characteristics

Overview

But what about a “cool feature”? Apple’s previous “S” iPhone upgrades each included distinctive features to set them apart from both previous iPhones and other devices in the marketplace. The iPhone 3GS included new video camera and voice control hardware and software to differentiate itself from its predecessor, while the iPhone 4S introduced Siri.

A data warehouse is a collection of data from various sources, stored in a large-capacity repository and used in the decision-making process (Prabhu, 2007). According to William Inmon, the characteristics of a data warehouse are as follows:

Overview

One of the important programming topics studied in this area is client-server socket programming. By studying socket programming techniques, we can see how a computer sends messages containing text, video, images, music, or other data over the internet.
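As a rough illustration of the client-server idea, here is a minimal sketch using Python's standard `socket` module. A server thread accepts one connection and echoes the message back to the client. The function names, the loopback address, and the `echo:` prefix are assumptions made for this example. A real application would also need to handle partial reads, multiple clients, and errors.

```python
import socket
import threading

def serve_once(server_sock):
    """Server side: accept one client, read its message, send a reply, close."""
    conn, _addr = server_sock.accept()
    with conn:
        data = conn.recv(1024)
        conn.sendall(b"echo: " + data)

def send_message(host, port, message):
    """Client side: connect, send the message bytes, return the server's reply."""
    with socket.create_connection((host, port)) as client:
        client.sendall(message)
        return client.recv(1024)

def demo():
    """Run the server and the client on the local machine and return the reply."""
    server_sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server_sock.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server_sock.listen(1)
    port = server_sock.getsockname()[1]
    worker = threading.Thread(target=serve_once, args=(server_sock,))
    worker.start()
    reply = send_message("127.0.0.1", port, b"hello")
    worker.join()
    server_sock.close()
    return reply

print(demo())
```

The message here is plain text, but the same byte stream could carry image, audio, or video data. The socket only moves bytes, and the two sides must agree on how to interpret them.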

Overview

The strategic grid was introduced in 1983 by McFarlan, McKenney and Pyburn in Harvard Business Review's "The Information Archipelago -- Plotting a Course." The strategic grid model is an IT-specific model designed to assess the nature of the projects that the IT organization has in its portfolio, with the aim of seeing how well that portfolio supports the operational and strategic interests of the firm.

Overview

You run an application that you have used for several months without any problems. One night, the app suddenly closes for no obvious reason. You try to run the application again, and it still closes by itself. What just happened?

According to makemac.com, there are several reasons why an application may quit on its own. Let's look at each one, from the most easily corrected to the most time-consuming.

Teachers and academics fear the new computer science curriculum is too broad in some of its requirements, causing concern about how some teachers may choose to interpret its content.

At the recent Westminster Education Forum keynote seminar, Reviewing the New Computing Curriculum, teachers, academics and industry experts aired their views on the new syllabus, raising alarms that if the new curriculum is misinterpreted it could be as unsuccessful as the ICT programme it is replacing. Ian Addison, ICT coordinator at St John the Baptist Church of England primary school in Hampshire, said interpretation of the new computing science curriculum worries him: “The word data is a boring word. It’s not just Excel, but it’s audio, video, e-books, etc.”

The government has unveiled plans for a new ‘tech level’ to run parallel with A-levels. Exam boards wanting to offer the qualification must win the backing of businesses or universities before the qualification is approved. Five employers, registered with Companies House, are needed before the qualification can be approved to be ranked alongside A-levels in exam league tables.

The tech level aims to prepare young people for the workplace, in particular for occupations in engineering, IT, accounting and hospitality. Joanna Poplawska, co-founder of the Corporate IT Forum Education and Skills Commission, said:

“We welcome this announcement as a huge step forward; the commission has persistently called for the government to involve business in the development of IT qualifications. But we have questions about the value these new qualifications will bring to young people and employers, and how exactly they will be delivered. We’re pleased that the government is taking action to offer vocational qualifications that will meet the requirements of business.”

Background

- Creation and deployment of high-quality eLearning content

- Different Learning Management Systems have very different delivery environments and tracking tools

- Need for interoperable content: durable, portable between systems, and reusable in a modular fashion

Overview

SCORM is a collection of specifications adapted from multiple sources to provide a comprehensive suite of e-learning capabilities that enable interoperability, accessibility and reusability of Web-based learning content. It is developed by the Advanced Distributed Learning (ADL) initiative. A Learning Management System (LMS) implements the SCORM API (the API Adapter). Communication between content and the LMS goes through the API Adapter (JavaScript), and there is no standard for the user interface.

Over the past several weeks, a number of leaks about Apple's rumored lower-cost plastic iPhone have surfaced, including design drawings from a case maker and photos of alleged rear shells in a number of bright colors. Based on these leaks, earlier this week MacRumors released its own high-resolution renderings showing what the device might look like in its entirety.

Techdy now reports that it has gotten its hands on what it believes to be legitimate front and rear parts for this lower-cost plastic iPhone, offering the first good look at how the device will appear fully assembled.

Among the most obvious differences from previous assumptions is the use of a black panel for the device as opposed to white. Techdy tells us that this black front panel will be used with all color variations of the rear shell, which will reportedly include blue, pink, yellow, green, and white.

Overview

Google, Hewlett-Packard and Microsoft were among the companies pledging to help improve technology education in Europe at an EU digital affairs conference in Dublin on Thursday (20 June).

A fifth of students are unemployed after leaving Britain’s worst-performing universities amid a continuing shortage of graduate jobs, new figures show.

French site Nowhereelse has posted what it claims are more leaked photos from China of casings for the long-rumored plastic iPhone.

We’re not entirely convinced these are the real deal and not just clones made based off of plastic iPhone rumors stretching back months. While the colors are broadly consistent with those of the iPod touch/nano, and we’d expect to see differences between metal and plastic, those colors look rather garish even allowing for the poor lighting.

Here’s what the iPod Touch 5th gen looks like:

Software engineering (SE) is concerned with developing and maintaining software systems that behave reliably and efficiently, are affordable to develop and maintain, and satisfy all the requirements that customers have defined for them. It is important because of the impact of large, expensive software systems and the role of software in safety-critical applications. It integrates significant mathematics, computer science and practices whose origins are in engineering.

Distance education of one sort or another has been around for a long time. Correspondence courses helped people learn trades on their own free time, while radio or taped television courses educated students in remote areas. Now, with the rapid expansion and evolution of the Internet, online education has become a reality. What began as a convenient means of offering internal training to employees via corporate intranets has now spread to the general public over the worldwide web.

Online-only colleges and career schools have flourished, and traditional ground-based universities are moving courses and degree programs onto the Internet. It’s now possible to earn a degree from an accredited college without ever setting foot on campus, and more people enroll every year.

Evidence of Growth

The Sloan Consortium, a non-profit foundation, conducts yearly surveys investigating online education. Their most recent report captured the online learning landscape as it stood in 2007-2008, revealing that:

- 20% of all US college students were studying online at least part-time in 2007;

- 3.9 million students were taking at least one online course during fall 2007, a growth rate of 12% over the previous year;

- this growth rate is much faster than the overall higher education growth rate of 1.2%.

Data Integration

Data integration is one of the steps of data preprocessing. It involves combining data residing in different sources and providing users with a unified view of these data. In other words, it merges data from multiple data stores (data sources).
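A minimal sketch of this merging step might look like the following, assuming two hypothetical sources (`crm_rows` and `billing_rows`) that share a `customer_id` key. The field names and the merge policy (later sources overwrite earlier ones on conflicting fields) are illustrative choices and are not prescribed by any particular tool.

```python
def integrate(crm_rows, billing_rows, key="customer_id"):
    """Merge rows from two sources into one unified record per key value."""
    unified = {}
    for source in (crm_rows, billing_rows):
        for row in source:
            # Create the record on first sight, then fold in this source's fields.
            unified.setdefault(row[key], {}).update(row)
    return [unified[k] for k in sorted(unified)]

crm = [{"customer_id": 1, "name": "Ana"}, {"customer_id": 2, "name": "Budi"}]
billing = [{"customer_id": 2, "balance": 50}, {"customer_id": 3, "balance": 0}]
for record in integrate(crm, billing):
    print(record)
```

Customer 2 appears in both sources and ends up as a single record with both a name and a balance, which is the "unified view" the paragraph refers to.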

How it works?

Fundamentally, it follows the concatenation operation from mathematics and the theory of computation. The concatenation operation on strings is generalized to an operation on sets of strings as follows:

For two sets of strings S1 and S2, the concatenation S1S2 consists of all strings of the form vw, where v is a string from S1 and w is a string from S2.
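The definition of set concatenation translates almost directly into a set comprehension. The function name and the sample sets below are chosen only for illustration.

```python
def concatenate(s1, s2):
    """Return S1S2: every string vw with v taken from s1 and w taken from s2."""
    return {v + w for v in s1 for w in s2}

print(sorted(concatenate({"a", "ab"}, {"c", "d"})))
```

Because the result is a set, any string that can be formed in more than one way (for example from `{"a", "ab"}` and `{"bc", "c"}`) appears only once.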

SWOT

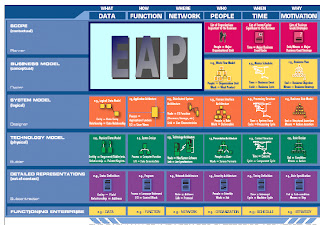

Introduction to Enterprise Architecture Planning

According to Spewak (1992), Enterprise Architecture Planning (EAP) is the process of defining architectures for the use of information in support of the business, together with the plan for implementing those architectures. EAP establishes three architectures: data architecture, application architecture, and technology architecture. EAP defines the business and the architectures but does not design systems, databases, or networks.

EAP covers the first and second rows of the first three columns of the Zachman Framework, namely the data, function, and network columns. The result of EAP is a high-level blueprint of data, applications, and technology for the entire enterprise, to be used in subsequent design and implementation.

EAP in the Zachman framework:

Data Cleaning

"Data cleaning is one of the three biggest problems in data warehousing - Ralph Kimball"

"Data cleaning is the number one problem in data warehousing - DCI survey"

Data cleansing, data cleaning or data scrubbing is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database. Used mainly in databases, the term refers to identifying incomplete, incorrect, inaccurate, irrelevant, etc. parts of the data and then replacing, modifying, or deleting this dirty data.

After cleansing, a data set will be consistent with other similar data sets in the system. The inconsistencies detected or removed may have been originally caused by user entry errors, by corruption in transmission or storage, or by different data dictionary definitions of similar entities in different stores. Data cleansing differs from data validation in that validation almost invariably means data is rejected from the system at entry and is performed at entry time, rather than on batches of data.
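The detect-then-correct-or-remove cycle can be sketched as a batch function. Everything specific here is an assumption for illustration: the record layout (`name`, `age`), the validity rule (an integer age between 0 and 120), and the choice to set rejected records aside for review instead of silently dropping them.

```python
def clean(records):
    """Correct or remove dirty records in a batch; return (cleaned, rejected)."""
    cleaned, rejected, seen = [], [], set()
    for rec in records:
        # Modify: normalise stray whitespace and inconsistent capitalisation.
        name = (rec.get("name") or "").strip().title()
        age = rec.get("age")
        # Detect incomplete or incorrect records and set them aside.
        if not name or not isinstance(age, int) or not 0 <= age <= 120:
            rejected.append(rec)
            continue
        # Delete: skip records that duplicate one already kept.
        if (name, age) in seen:
            continue
        seen.add((name, age))
        cleaned.append({"name": name, "age": age})
    return cleaned, rejected

raw = [
    {"name": "  alice ", "age": 30},
    {"name": "Alice", "age": 30},
    {"name": "", "age": 25},
    {"name": "Bob", "age": -4},
    {"name": "carol", "age": None},
]
cleaned, rejected = clean(raw)
print(cleaned, len(rejected))
```

Unlike validation at entry time, this runs over data already stored, which matches the distinction drawn in the paragraph above.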

Apple’s iOS 7 is growing quickly with more tablets and iPhones running the beta software than were running iOS 6 at the same time last year, according to new data released by mobile web optimizing company Onswipe. The startup found that by July 1, 2013, 0.28 percent of all iPad visits to its mobile-optimized sites were from devices running iOS 7, and as of June 17, 0.77 percent of all iPhones making Onswipe visits were also on the new beta OS.

Overview

Data preprocessing is used in database-driven applications such as customer relationship management and in rule-based applications (like neural networks).

Why do we need data preprocessing?

1. Data in the real world is dirty

- incomplete: attribute values are missing, attributes that should exist are absent, or only aggregate data is available

- noisy: contains errors or outliers

- inconsistent: there are discrepancies in codes and values

- redundant data

2. No quality data, no quality mining results (garbage in, garbage out)

- quality decisions must be based on quality data

- a data warehouse needs an integration of data of a certain quality

3. Data extraction, cleaning, and transformation are an important part of building a data warehouse

Generally, tasks in data mining are divided into two categories:

- Predictive

- Descriptive

In more detail:

- Classification (predictive)

- Grouping / Clustering (descriptive)

- Association Rules (descriptive)

- Sequential Pattern (descriptive)

- Regression (predictive)

- Deviation Detection (predictive)

Overview

1. Business Architecture : this architecture defines the business strategy, rules, organization, and key business processes.

2. Data Architecture: this architecture describes the structure of the organization's data assets.

3. Application Architecture: this architecture provides a blueprint for the application systems deployed, their interactions, and their relation to the core business processes of the organization.

4. Technology Architecture: this architecture describes the hardware and software elements required to support the business, data, and application architectures.

Distributed Problem-Based Learning

RULES

- Learning begins with a presentation of the existing problem. Explain the broad outline of the problem situation and its attributes. Also explain the learning process here and determine the learning tasks.

- The learners convey their initial perceptions of the existing problem.

- Then, the learners analyze the existing problem and also analyze their initial perceptions of the problem.

- After analyzing the problem and their initial perceptions, the learners can improve their initial perceptions with new perceptions or with issues related to the problem.

- In the final phase, the learners convey critical ideas in line with the arguments or perceptions they have obtained.

Impacts

- The learners can learn to present arguments and ideas well

- With many arguments collected, the learners' insight and knowledge are broadened and they gain new knowledge

- The learners can learn strategies for drawing a solution from a problem

- Solutions to the problem can be more accurate because many arguments are presented, which can add value to the solution and reduce errors

MEDIA

I think the most suitable medium is a forum (group discussion). Here the problem can be described, and each learner can deliver arguments and solution ideas by posting or commenting.

Technology is highly developed in human life today. Everyone needs technology that is continually updated to meet the needs of human life. One technology that is rapidly expanding is computer technology. One important component of computing is the network. With a computer network, computers can communicate with each other and access information. In this case, network maintenance is essential for keeping communication between computers smooth. So people need sound and appropriate network management concepts to resolve such issues.

Network Mapping

Data mining (the analysis step of the knowledge discovery in databases process, or KDD), an interdisciplinary subfield of computer science, is the computational process of discovering patterns in large data sets, involving methods at the intersection of artificial intelligence, machine learning, statistics, and database systems. The overall goal of the data mining process is to extract information from a data set and transform it into an understandable structure for further use. Aside from the raw analysis step, it involves database and data management aspects, data pre-processing, model and inference considerations, interestingness metrics, complexity considerations, post-processing of discovered structures, visualization, and online updating.

The term is a buzzword, and is frequently misused to mean any form of large-scale data or information processing (collection, extraction, warehousing, analysis, and statistics), but it is also generalized to any kind of computer decision support system, including artificial intelligence, machine learning, and business intelligence. In the proper use of the word, the key term is discovery, commonly defined as "detecting something new". Even the popular book "Data Mining: Practical Machine Learning Tools and Techniques with Java" (which covers mostly machine learning material) was originally to be named just "Practical Machine Learning", and the term "data mining" was only added for marketing reasons. Often the more general terms "(large scale) data analysis" or "analytics" are more appropriate, or, when referring to actual methods, artificial intelligence and machine learning.

The technology we are familiar with has accompanied humankind in the form of the methods and products that result from using science to improve the quality of human life, and it continues to change. Technology changes with human needs. The development of this technology is also influenced by the need to determine whether the quality of multimedia information is good or bad. Multimedia Services Quality Assessment is one of the methods that addresses this problem. This method is used to measure the quality of the multimedia received by users and transmitted by operators, so that good-quality multimedia can be delivered.

Computer Vision is a field of science that studies how computers can recognise the object being observed. The main purpose of this field is to enable a computer to mimic the eye's ability to analyse images or objects. Computer Vision is a combination of Image Processing (related to the transformation of the image) and Pattern Recognition (relating to the identification of objects in the image).

How can a computer read an object? The cues a computer uses include color (how many variations there are at a point), the boundaries and differences in color in certain parts, consistent points (corners), area or shape information, and the intensity of physical movement or displacement. The supporting functions in a Computer Vision system are image capture (Image Acquisition), image processing (Image Processing), image data analysis (Image Analysis), and image data understanding (Image Understanding).
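One of the cues mentioned above, boundaries where the color changes sharply, can be illustrated with a very small sketch. The image is assumed to be a grid of grayscale intensities from 0 to 255. The function name and the threshold value are hypothetical, and real systems use more robust operators than a single neighbour difference.

```python
def horizontal_edges(image, threshold=50):
    """Mark positions where a pixel differs from its right-hand neighbour by more than threshold."""
    edges = []
    for row in image:
        edges.append([
            1 if abs(row[x + 1] - row[x]) > threshold else 0
            for x in range(len(row) - 1)
        ])
    return edges

# A dark region on the left and a bright region on the right.
image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
print(horizontal_edges(image))
```

In terms of the pipeline above, the hard-coded grid stands in for Image Acquisition, the difference computation is a simple Image Processing step, and deciding that the marked column is an object boundary would belong to Image Analysis.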

Technology is highly developed in human life today. Everyone needs technology that is continually updated to meet the needs of human life. One technology that is rapidly evolving is computer technology. It was inevitable that computer technology would grow rapidly, especially in information processing. Computers were developed by humans to help get work done. This development is driven by the passion and desire of humans, who are never satisfied. The rapid development of technology will give birth to the latest computer technology, called quantum computing.

Overview

The Zachman Framework is an enterprise architecture framework that provides a formal and highly structured way of viewing and defining an enterprise. It consists of a two-dimensional classification matrix based on the intersection of six communication questions (What, Where, When, Why, Who and How) with six levels of reification, successively transforming the abstract ideas on the Scope level into concrete instantiations of those ideas at the Operations level. The Zachman Framework is a schema for organizing architectural artefacts (in other words, design documents, specifications, and models) that takes into account both whom the artefact targets (for example, business owner and builder) and what particular issue (for example, data and functionality) is being addressed. The Zachman Framework is not a methodology in that it does not imply any specific method or process for collecting, managing, or using the information that it describes. The Framework is named after its creator John Zachman, who first developed the concept in the 1980s at IBM. It has been updated several times since.
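The classification matrix can be pictured as a simple lookup structure keyed by level and question. In the sketch below, the six questions come from the text, but only the first and last level names (Scope and Operations) do. The four middle labels are placeholders, and the function names and the sample artefact are likewise assumptions for illustration.

```python
QUESTIONS = ["What", "Where", "When", "Why", "Who", "How"]
# Only "Scope" and "Operations" are named in the text; the rest are placeholders.
LEVELS = ["Scope", "Level 2", "Level 3", "Level 4", "Level 5", "Operations"]

def empty_grid():
    """Build the 6 x 6 matrix: one empty list of artefacts per (level, question) cell."""
    return {(level, question): [] for level in LEVELS for question in QUESTIONS}

def file_artefact(grid, level, question, artefact):
    """Classify an artefact by the level it targets and the question it answers."""
    grid[(level, question)].append(artefact)

grid = empty_grid()
file_artefact(grid, "Scope", "What", "list of business entities")
print(len(grid), grid[("Scope", "What")])
```

The structure only classifies artefacts. It says nothing about the order in which cells are filled, which reflects the point that the framework is a schema and not a methodology.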

The technology we are familiar with has accompanied humankind in the form of the methods and products that result from using science to improve the quality of human life, and it continues to change. Technology changes with human needs, including the needs of people in business processes. With the emergence of a variety of business challenges such as globalisation, financial conditions, and political challenges, businesses need to combine their need for information with strategies and a technology-based approach.

Customer Master Data Management is a solution for current business needs, which demand efficiency, and for customer value that is sometimes difficult to understand. In addition, master data management aims to achieve a single view of the customer, a single view of the product, an account master record, and a single view of location. How should the strategy be implemented? To achieve the best strategy, we have to look at the guidelines and references for building a business framework, the application of the best ecosystems, and how implementation scenarios can be created. In a Master Data Management (MDM) system, there is an MDM identification number (ID) alongside the IDs used by the derivative systems related to MDM. Each derivative system keeps its own ID together with the MDM ID.

Before discussing how computation was done before the advent of computers, note that computing itself can be defined as a way of solving problems using mathematical concepts (to compute). Today, most computation is related to computers, and numerical and analytical problems are solved using a computer. But how was computation done before the computer? In this post I will discuss how humans performed computation, and its history, before computers were invented.

In ancient times, before there were computers, people used only simple tools to solve problems. The tools used were usually derived from nature, for instance stone, grain, or wood. The first traditional, mechanical calculator was the abacus, which appeared about 5000 years ago in Asia Minor. This tool can be thought of as an early computing machine. It allows users to perform calculations by sliding beads arranged on a rack. Merchants of the time used the abacus to calculate trade transactions. However, with the advent of pencil and paper, particularly in Europe, the abacus lost its popularity.

In the previous post I explained how computing can be done without a computer, so in this post I will tell you how the computer, a new kind of computing engine, came to be created. I will first explain how the computer came into existence, and then how computers have developed up to today. In this post I will explain the five generations of computers.

First Generation

The first generation of computers was created during the Second World War. At first, computers were used to assist the military. In 1941, Konrad Zuse, a German engineer, built a computer, the Z3, to design airplanes and missiles. Another computer developed in this generation was the ENIAC, a general-purpose computer that worked 1000 times faster than previous computers. Then came computers based on the Von Neumann architecture, a model with a memory that holds both programs and data. This technique allows the computer to stop at some point and then resume its work. One such computer was the UNIVAC I (Universal Automatic Computer I). In this generation, computers could only perform certain tasks, and their physical size was very large because the components they used were also very large.
